Song Han is an associate professor at MIT EECS. He received his PhD from Stanford University. He proposed the “Deep Compression” technique, including pruning and quantization, that is widely used for efficient AI computing, and the “Efficient Inference Engine,” which first brought weight sparsity to modern AI chips and is one of the top-5 most cited papers in the 50-year history of ISCA. He pioneered the TinyML research that brings deep learning to IoT devices, enabling learning on the edge. His team’s work on hardware-aware neural architecture search (once-for-all network) enables users to design, optimize, shrink and deploy AI models to resource-constrained hardware devices, and has won first place in many low-power computer vision contests at flagship AI conferences. His team’s recent work on large language model quantization/acceleration (SmoothQuant, AWQ, StreamingLLM) has improved the efficiency of LLM inference and has been adopted by NVIDIA TensorRT-LLM. Song received best paper awards at ICLR and FPGA, and faculty awards from Amazon, Facebook, NVIDIA, Samsung and SONY. Song was named to the “35 Innovators Under 35” list by MIT Technology Review for his contribution to the “deep compression” technique that “lets powerful artificial intelligence (AI) programs run more efficiently on low-power mobile devices.” Song received the NSF CAREER Award for “efficient algorithms and hardware for accelerated machine learning”, the IEEE “AIs 10 to Watch: The Future of AI” award, and a Sloan Research Fellowship. Song’s research in efficient AI computing has been successfully commercialized and has influenced the industry. He cofounded DeePhi (now part of AMD) and OmniML (now part of NVIDIA). Song developed the EfficientML.ai course to disseminate efficient ML research.

Recent work: accelerating LLM and Generative AI [slides]

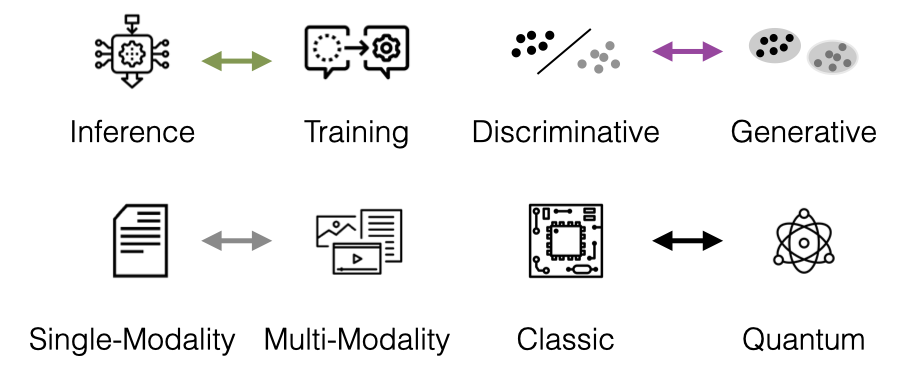

Generative AI models are significantly larger (>1000x) than traditional predictive AI, presenting new computational challenges. We innovated in key areas of quantization, parallelization, KV cache optimization, long-context learning, and multi-modal representation learning to minimize GenAI costs.

I pioneered the area of model compression that can shrink neural networks by >10x without hurting accuracy. Through pruning, quantization, and neural architecture search, we can fit neural networks in micro-controllers (MCUs). We also enable on-device training with 1000x less memory on MCUs.
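As a rough illustration of the two basic ingredients named above, here is a minimal sketch of magnitude pruning followed by symmetric 8-bit quantization in plain Python. It is a toy, one-shot version under simplifying assumptions (a flat list of weights, a single shared scale, no retraining) and not the Deep Compression pipeline itself; the function names and the example weights are hypothetical.

```python
def magnitude_prune(weights, sparsity):
    """Zero out the smallest-magnitude fraction of weights.

    Ties at the threshold are all pruned, so slightly more than
    `sparsity` may be removed when magnitudes repeat.
    """
    k = int(len(weights) * sparsity)  # number of weights to remove
    if k == 0:
        return list(weights)
    threshold = sorted(abs(w) for w in weights)[k - 1]
    return [0.0 if abs(w) <= threshold else w for w in weights]


def quantize_symmetric(weights, bits=8):
    """Map floats to signed integers that share one scale factor."""
    qmax = 2 ** (bits - 1) - 1  # 127 for 8 bits
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / qmax if max_abs > 0 else 1.0
    q = [max(-qmax, min(qmax, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q, scale):
    """Recover approximate float weights from the integer codes."""
    return [v * scale for v in q]


# Hypothetical toy weights, chosen only for illustration.
weights = [0.9, -0.05, 0.4, 0.01, -0.7, 0.02, 0.3, -0.03]
pruned = magnitude_prune(weights, sparsity=0.5)
q, scale = quantize_symmetric(pruned, bits=8)
restored = dequantize(q, scale)
```

After pruning, half of the toy weights are zero and the rest are stored as small integers plus one float scale; each restored weight differs from its pruned value by at most half a quantization step.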

Sparsity in neural networks arises where not all neurons are connected. I designed the first hardware accelerator, EIE, to exploit weight sparsity. I identify new sources of sparsity in modern AI, including sparse attention, token pruning, and point clouds, and build systems and accelerators that exploit this sparsity efficiently.
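To make the benefit of weight sparsity concrete, the sketch below stores only the nonzero weights of a matrix in a generic compressed sparse row (CSR) layout and multiplies it by a vector, so zero weights cost neither storage nor multiplications. This is a software illustration under stated assumptions, not EIE's actual encoding or dataflow; the helper names and the toy matrix are hypothetical.

```python
def to_csr(dense):
    """Convert a dense row-major matrix to CSR (values, column indices, row pointers)."""
    values, col_idx, row_ptr = [], [], [0]
    for row in dense:
        for j, w in enumerate(row):
            if w != 0:
                values.append(w)
                col_idx.append(j)
        row_ptr.append(len(values))  # end of this row's nonzeros
    return values, col_idx, row_ptr


def csr_matvec(values, col_idx, row_ptr, x):
    """Compute y = W @ x, touching only the stored nonzero weights."""
    y = []
    for i in range(len(row_ptr) - 1):
        acc = 0.0
        for k in range(row_ptr[i], row_ptr[i + 1]):
            acc += values[k] * x[col_idx[k]]
        y.append(acc)
    return y


# Hypothetical 3x4 weight matrix with 9 of 12 entries pruned to zero.
dense = [
    [0.0, 2.0, 0.0, 0.0],
    [1.0, 0.0, 0.0, 3.0],
    [0.0, 0.0, 0.0, 0.0],
]
x = [1.0, 2.0, 3.0, 4.0]

values, col_idx, row_ptr = to_csr(dense)
y = csr_matvec(values, col_idx, row_ptr, x)
```

Here the multiply performs 3 multiply-accumulates instead of 12, which is the kind of saving a sparsity-aware accelerator aims to realize in hardware.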

Tuesday/Thursday 3:35-5:00pm Eastern Time

Our efficient ML research has influenced and landed in many industry products, thanks to the close collaboration with our sponsors: Intel OpenVino, Intel Neural Compressor, Apple Neural Engine, NVIDIA Sparse Tensor Core, NVIDIA FasterTransformer, AMD-Xilinx Vitis AI, Qualcomm AI Model Efficiency Toolkit (AIMET), Amazon AutoGluon, Microsoft NNI, SONY Neural Architecture Search Library, SONY Model Compression Toolkit, ADI MAX78000/MAX78002 Model Training and Synthesis Tool, Ford Trailer Backup Assist.

We show SmoothQuant can enable W8A8 quantization for Llama-1/2, Falcon, Mistral, and Mixtral models with negligible loss.

We now support VILA Vision Language Models in AWQ & TinyChat! Check out our latest demos with multi-image inputs!

Congrats to Ji Lin on completing and defending his PhD thesis: "Efficient Deep Learning Computing: From TinyML to Large Language Model". Ji joined OpenAI after graduation.

AWQ is integrated into NVIDIA TensorRT-LLM, which can fit Falcon-180B on a single H200 GPU with INT4 AWQ and run Llama-70B 6.7x faster than on A100.

Attention Sinks, a library from the community, enables StreamingLLM on more Hugging Face LLMs. blog.

Email: FirstnameLastname [at] mit [dot] edu

Office: 38-344. I’m fortunate to be in Prof. Paul Penfield and Prof. Paul E. Gray's former office.

If you work on efficient LLM, VLM, GenAI and are interested in joining my lab, please fill in the recruiting form. I do not reply to inquiry emails if the recruiting form is incomplete.

PhD applicants: select "ML+System" track in the MIT PhD application system.